- Date(s): April 2025 - November 2025

- Topics: Virtual Reality (VR), Electroencephalography (EEG), Eye Tracking, Human-Computer Interaction (HCI), Traffic Models, Crowd Models

- Models: Intelligent Driver Model (IDM), A*/A-Star, Reciprocal Velocity Obstacles (RVO), Human Subjects Experimentation, Surveys & Questionnaires

- Hardware: Meta Quest Pro, Muse S, Raspberry Pi 4B

- Software: Unity (Game Engine), Mind Monitor, scrcpy, C#/CSharp, Python, OpenCV

- Collaborators:

- Kaishuu Shinozaki-Conefrey (Website, krs8750@nyu.edu)

- Dr. Paul M. Torrens (Profile, Website, Google Scholar, torrens@nyu.edu)

- Links: Github, PDF (Full), DOI (Full), PDF (Short), DOI (Short)

StreetSim V2.0

Introducing brain activity and eye tracking to urban simulation

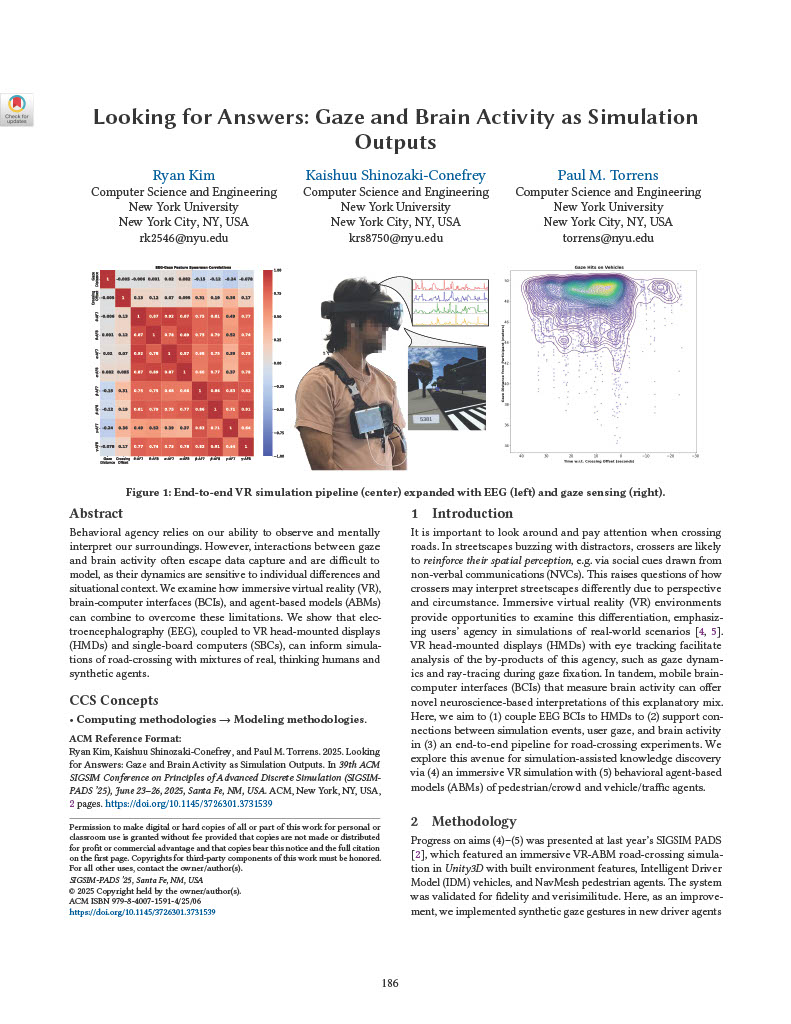

I conducted this short study to determine whether I could identify patterns between people’s gaze behaviors and brain activity. I had already created a VR-based simulation testbed for a previously published research report, so I reused it here. The analysis required three devices: a Meta Quest Pro (Note: these devices are discontinued!), a Muse S brain-computer interface (BCI), and a Raspberry Pi 4B. Each piece of the setup was chosen for a specific reason:

- The Meta Quest Pro comes with a built-in eye tracker. An interesting… quirk about this device is that there’s no official source for the sample rate of its eye tracker. This paper cites the sample rate to be 90Hz, but online discussions (as well as my own, albeit clunky, testing) suggest a 72Hz sample rate. I eventually just downsampled the gaze data to 60Hz via custom scripts.

- Unity was the game engine that ran the simulation. I had the simulation side-loaded as a standalone application into the Meta Quest Pro. Because I had full control over the simulation space, I could record the space-time trajectories of all entities in the simulation via custom code, including all vehicles, pedestrians, and the user themselves.

- The Muse S is a nifty BCI that allows me to measure the Electroencephalograms (EEGs) of its wearers. It only has five electrode channels: two along the frontal lobe (AF7, AF8), two along the temporal lobe (TP9, TP10), and a reference node (Fz).

- The Raspberry Pi 4B had to run separately, connected to the Meta Quest Pro (MQP) via USB-C. This single-board computer was required for one specific purpose: recording the eye display shown to the MQP wearers. The MQP comes with its own recording system, but it has issues - it could potentially reduce the FPS of the simulation, and I needed the raw footage for gaze analysis anyway.
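The 60Hz downsampling mentioned in the list above can be sketched roughly as follows. This is not the project's actual script: the function name `downsample_nearest`, the `(timestamp, value)` sample layout, and the nearest-sample strategy are all assumptions made for illustration.

```python
from bisect import bisect_left

def downsample_nearest(samples, target_hz=60.0):
    """Resample (timestamp_seconds, value) pairs onto a uniform time grid.

    `samples` must be sorted by timestamp. For every tick of the target
    grid, the sample closest in time is kept (nearest-neighbor resampling).
    Hypothetical helper, not part of StreetSim.
    """
    if not samples:
        return []
    times = [t for t, _ in samples]
    step = 1.0 / target_hz
    start, end = times[0], times[-1]
    # Small epsilon guards against floating-point error at the last tick.
    n_ticks = int((end - start) / step + 1e-9) + 1
    out = []
    for k in range(n_ticks):
        tick = start + k * step
        i = bisect_left(times, tick)
        if i == 0:
            j = 0
        elif i == len(times):
            j = len(times) - 1
        else:
            # Pick whichever neighbor is closer to the tick.
            j = i if (times[i] - tick) < (tick - times[i - 1]) else i - 1
        out.append((tick, samples[j][1]))
    return out

# One second of made-up gaze samples at an assumed 72 Hz source rate.
raw = [(k / 72.0, k) for k in range(73)]
resampled = downsample_nearest(raw, 60.0)
```

Nearest-sample selection keeps every output value an actually observed gaze sample, which may be preferable to interpolation when the true source rate is uncertain.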

The idea here is that I throw people into our VR road-crossing simulation and simultaneously measure their EEG, gaze behavior, trajectories, and visual fields using the Muse S, Meta Quest Pro, and Raspberry Pi. The recordings are naturally going to be asynchronous, so to fix this I also recorded the frame number of the simulation and mapped it to every data sample. This meant I could align the EEG results with gaze activities and simulation frames.
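One way to picture the frame-number mapping is the sketch below. It assumes, purely for illustration, that the simulation writes a log of `(frame_number, timestamp)` pairs and that the other streams carry timestamps on a comparable clock; the names `tag_with_frame` and `group_by_frame` are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict

def tag_with_frame(sample_times, frame_log):
    """Assign each sample the most recent simulation frame.

    `frame_log` is a list of (frame_number, timestamp) sorted by timestamp.
    Samples recorded before the first frame are tagged with None.
    """
    frame_times = [t for _, t in frame_log]
    tagged = []
    for t in sample_times:
        i = bisect_right(frame_times, t) - 1
        tagged.append(frame_log[i][0] if i >= 0 else None)
    return tagged

def group_by_frame(values, frames):
    """Collect sample values under the frame number they were tagged with."""
    groups = defaultdict(list)
    for value, frame in zip(values, frames):
        if frame is not None:
            groups[frame].append(value)
    return dict(groups)

# Made-up data: three simulation frames and six EEG samples.
frame_log = [(100, 0.00), (101, 0.02), (102, 0.04)]
eeg_times = [-0.01, 0.00, 0.01, 0.02, 0.035, 0.05]
eeg_values = [1, 2, 3, 4, 5, 6]

eeg_frames = tag_with_frame(eeg_times, frame_log)
eeg_by_frame = group_by_frame(eeg_values, eeg_frames)
```

Once every stream is keyed by frame number, EEG, gaze, and trajectory records can be joined frame by frame regardless of each device's native sample rate.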

What We Did

I ended up discovering some statistically significant correlations between the Theta, Beta, and Gamma EEG frequencies and users’ crossing attempts and gaze distances (the distance between users and their gaze fixation targets). These are pretty cool to identify, because we know that Theta frequencies are associated with navigational skill and difficulty - this means that people were, to some extent, engaging their spatial cognitive faculties while in the simulation. Furthermore, the Beta and Gamma frequencies suggest they were really concentrating and processing the visual information they were provided.
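As a rough illustration of the quantities involved, the sketch below computes a gaze distance and a Pearson correlation coefficient. The choice of Pearson's r is an assumption (the statistical test actually used is not stated here), and the function names and sample values are made up.

```python
import math

def gaze_distance(user_pos, target_pos):
    """Euclidean distance between the user and their gaze fixation target."""
    return math.dist(user_pos, target_pos)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)

# Made-up positions (meters) and per-fixation Theta band power values.
user_positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
fixation_targets = [(3.0, 4.0, 0.0), (1.0, 2.0, 0.0), (2.0, 0.0, 1.0)]
theta_power = [0.9, 0.5, 0.3]

distances = [gaze_distance(u, t) for u, t in zip(user_positions, fixation_targets)]
r = pearson_r(distances, theta_power)
```

A coefficient alone does not establish significance; a real analysis would also need a significance test and enough samples per participant.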

I consider this a preliminary exploration - we are still missing some comparisons with equivalent real-world scenarios, and I’m pretty sure there are other factors in crossing that we missed. But I like to think that this is a promising first step in further understanding people’s cognition when walking around on streets.

There are two reports tied to StreetSim V2.0:

- A short paper published at SIGSIM PADS ’25: Download Manuscript PDF (1.15 MB)

- A full paper published at SIGSPATIAL GeoSim 2025: Download Manuscript PDF (5.85 MB)

- Torrens, P. M., Kim, R., & Shinozaki-Conefrey, K. (2025). Experiential geosimulation. Proceedings of the 8th ACM SIGSPATIAL International Workshop on Geospatial Simulation (pp. 12–20). New York, NY, USA: Association for Computing Machinery. URL: https://doi.org/10.1145/3764921.3770147, doi:10.1145/3764921.3770147

- Full Paper, Bibtex

- Kim, R., Shinozaki-Conefrey, K., & Torrens, P. M. (2025). Looking for answers: gaze and brain activity as simulation outputs. Proceedings of the 39th ACM SIGSIM Conference on Principles of Advanced Discrete Simulation (pp. 186–187). New York, NY, USA: Association for Computing Machinery. URL: https://doi.org/10.1145/3726301.3731539, doi:10.1145/3726301.3731539

- Full Paper, Bibtex